Stop Prompting. Start Architecting.

Why the "Prompt" is a Mirage and How to Build a True AI-Native Operating System

The Seminal Guide to Context Engineering

I still remember the first time it happened—that electric moment when AI just worked. I asked ChatGPT a complex question, expecting another stream of generic answers. What came back was crisp, structured, eerily intelligent. For a second, I thought I was staring at the future.

A week later, I tried the same experiment—and it collapsed. Same model. Same prompt. Completely different results. The illusion cracked. I wasn’t working with intelligence. I was working with amnesia.

That’s when I realized: the first wave of AI wasn’t intelligence at all. It was eloquence without memory. We’d mistaken beautiful sentences for understanding.

Part 1: The Mirage of the Prompt Era

The Age of “Vibe Coding”

Every revolution begins with a misunderstanding.

For the past two years, we’ve been obsessed with prompting—the idea that if we could master the right phrasing, we could unlock artificial intelligence. “Prompt engineering” became a new literacy. Entire careers formed around clever inputs.

It felt creative, but it wasn’t durable.

That phase was vibe coding—an era of improvisation dressed up as engineering. We crafted ornate keys for locks that changed shape with every use case. It produced flashes of brilliance but left no foundation.

Intelligence doesn’t emerge from tricking a model. It emerges from designing its world.

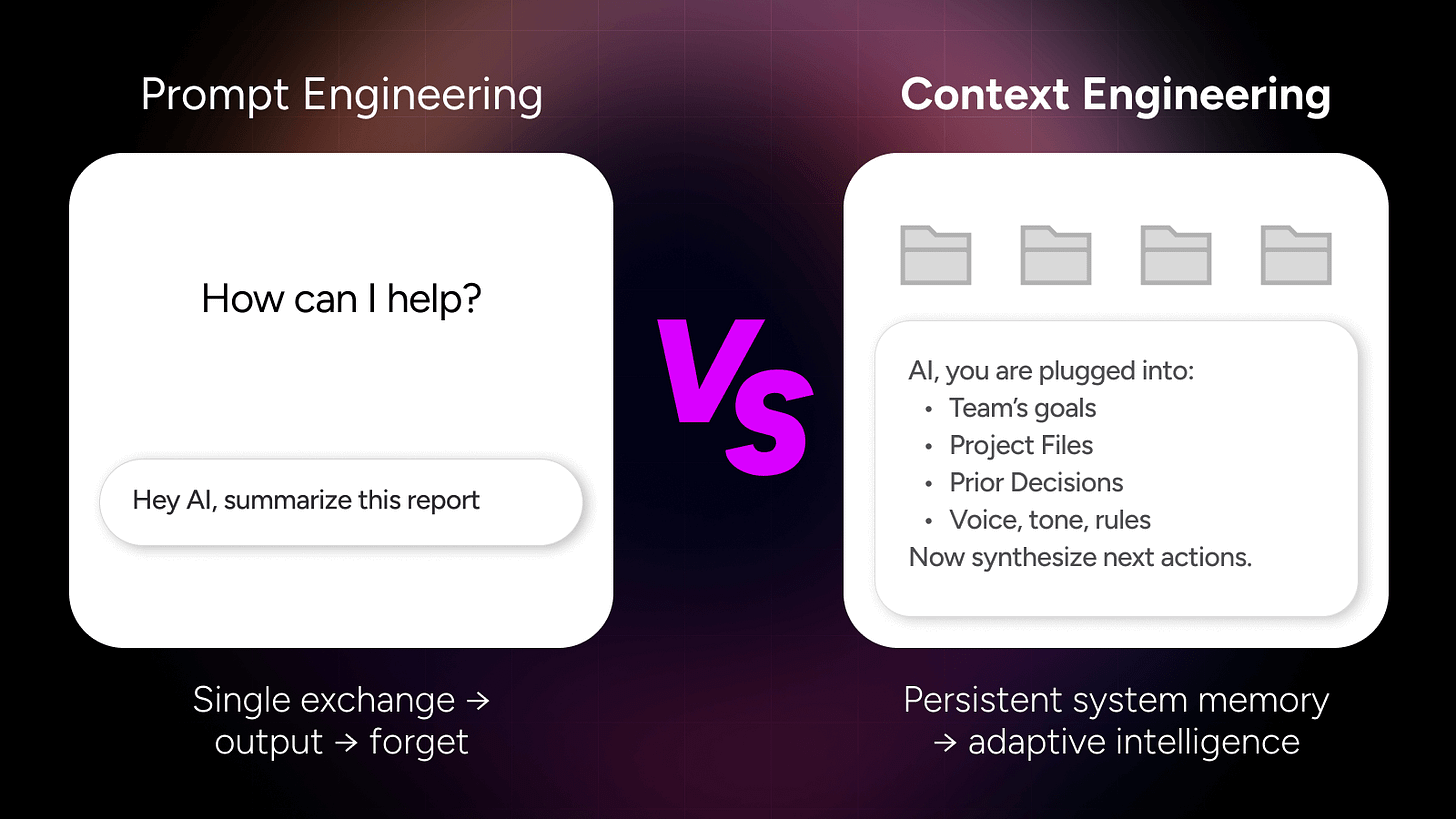

The field is now evolving from prompt engineering to context engineering. This isn’t a rebrand—it’s a reformation. It’s the shift from asking questions to constructing consciousness.

From Commands to Context

Andrej Karpathy gave us the metaphor that defines this era.

The LLM is a CPU—a general-purpose processor. The context window is its RAM—fast, limited, volatile.

If that’s true, then context engineering is the operating system—the discipline of building the environment in which intelligence runs.

The prompt is no longer the star. It’s just a command. The real magic lies in the context—the full ecosystem of information, rules, and relationships that shape how the model thinks.

When you design context, you’re not telling AI what to say. You’re defining what it knows—and, by extension, what it can become.

The Deeper Problem: Corporate Drag

The obsession with prompting was a symptom of a deeper disease: Corporate Drag.

It’s the bureaucratic friction, the meeting sprawl, the endless decks, the sheer gravitational force of misalignment that kills speed and creativity in every modern company. It’s the gap between a leader’s vision and a team’s ability to execute it.

We tried to solve this human problem with a technical patch. We gave our teams a powerful new engine—the LLM—but we chained it to a broken, industrial-era chassis. The result was predictable: we spun our wheels faster but went nowhere new.

The problem was never the engine. It was the operating system.

The Solution: The Shared Brain

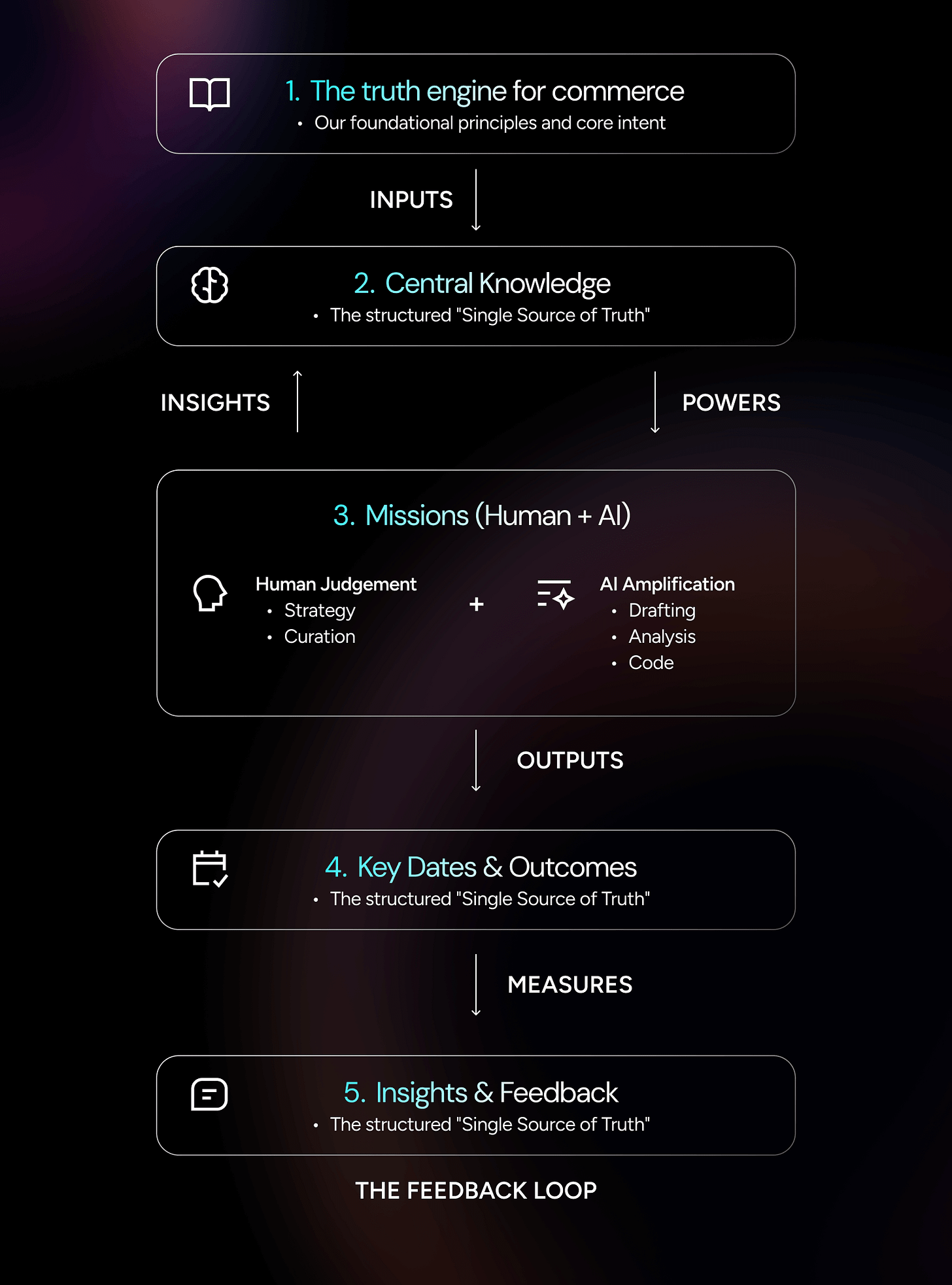

At Demand.io, we stopped trying to optimize the old system. We built a new one.

We operate as a context-engineering-first company. Our internal system, AIOS (AI Operating System), unifies every strategy, artifact, and decision into one living context layer. Every person and every AI agent reads from, and writes back to, the same source of truth.

It feels like the company is thinking with one brain. Because it is.

This isn’t a theoretical exercise. It’s our daily reality. Last week, the ChatGPT App Store launched, creating a paradigm shift in our market. In a traditional company, pivoting to capture such an opportunity would take weeks of meetings, decks, and debate.

We did it in one day.

From my morning realization to a fully realigned company executing on a new Master Plan took less than eight hours. I updated the core grounding files that define our strategy, and that new context rippled through the AIOS instantly. Every team member could pull down the changes, see what they meant for them, and begin updating their own domains.

That is the magic of a shared brain. It’s not just efficient—it’s a state of instantaneous coordination and alignment that feels almost telepathic.

Part 2: The Blueprint for Architecting Minds

The “what” and “why” are the easy part; they make an inspiring manifesto. But to actually build the future, you need the blueprints. You need to understand the “how.”

A true Context Engineer is a multi-disciplinary architect. They understand the physics of this new medium, the technology stack required, the organizational structure it demands, and the operational workflows it enables.

This is the blueprint for that system.

Layer 1: The Core Physics of Context

Before you can build, you must understand the “laws of physics” of the medium you’re working in. Context is not just “data.” It is a non-uniform, hyper-compressible, and decaying computational resource.

Context is a Probabilistic Lever, Not “Data.” Context is the minimal, task-conditioned set of facts that, when presented, most increases the probability of the correct next agent action. Our job is not to find data; it is to find the precise, minimal lever that achieves the desired outcome.
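One way to make the “minimal lever” idea concrete is a budgeted selection step. The sketch below is an illustrative greedy heuristic, not AIOS’s actual method: it assumes each candidate fact already carries a token cost and a hypothetical `lift` score estimating how much it raises the probability of the correct action, and it picks facts by lift per token until a token budget is spent.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Fact:
    text: str
    tokens: int    # token cost of including the fact
    lift: float    # hypothetical estimate of how much the fact raises P(correct action)

def select_context(facts: list[Fact], budget: int, min_lift: float = 0.0) -> list[Fact]:
    """Greedy knapsack heuristic: best lift-per-token first, until the budget is spent."""
    candidates = [f for f in facts if f.lift > min_lift]    # drop facts that do not move the needle
    candidates.sort(key=lambda f: f.lift / max(f.tokens, 1), reverse=True)
    chosen: list[Fact] = []
    used = 0
    for fact in candidates:
        if used + fact.tokens <= budget:                    # skip anything that would overflow
            chosen.append(fact)
            used += fact.tokens
    return chosen

facts = [
    Fact("Refund window is 30 days", tokens=8, lift=0.90),
    Fact("Company was founded in 2010", tokens=6, lift=0.01),
    Fact("<full 4,000-token refund policy>", tokens=4000, lift=0.95),
    Fact("Customer is on the Pro plan", tokens=7, lift=0.60),
]
chosen = select_context(facts, budget=100, min_lift=0.05)
```

With a 100-token budget, the 4,000-token policy dump loses to two short, high-lift facts, and near-zero-lift trivia is filtered out entirely.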

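Latency is Prefill-Dominated. Total latency ≈ Time To First Token (TTFT) + (Time Per Output Token × number of output tokens). TTFT is driven mainly by prefill, the pass in which the model processes every input token and builds its KV cache before it can emit anything. Prefill cost grows with context length, so as context windows grow, TTFT comes to dominate the user experience, especially for long inputs with short answers.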
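The latency formula can be worked through with illustrative numbers. The throughput and per-token figures below are assumptions chosen for round arithmetic, not measurements of any particular model, and TTFT is simplified to pure prefill time that grows linearly with input length (real TTFT also includes queueing, and prefill grows faster than linearly at very long lengths because of attention).

```python
def latency_breakdown(input_tokens: int, output_tokens: int,
                      prefill_tok_per_s: float, tpot_s: float) -> dict[str, float]:
    """Total latency ~= TTFT + TPOT * output_tokens, with TTFT modeled as prefill only."""
    ttft = input_tokens / prefill_tok_per_s     # time to process the whole context
    decode = tpot_s * output_tokens             # time to generate the answer
    total = ttft + decode
    return {"ttft_s": ttft, "decode_s": decode, "total_s": total,
            "prefill_share": ttft / total}

# Same 200-token answer, two context sizes (illustrative numbers).
short_ctx = latency_breakdown(2_000, 200, prefill_tok_per_s=10_000, tpot_s=0.02)
long_ctx = latency_breakdown(200_000, 200, prefill_tok_per_s=10_000, tpot_s=0.02)
```

Under these assumptions, prefill is about 5% of a 4.2-second response with a 2,000-token context, but about 83% of a 24-second response with a 200,000-token context.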

Context is Not Flat (Positional Decay). The context window is not a perfect memory plane. Models tend to show a U-shaped performance curve: information at the beginning and end of a long context is used reliably, while information in the middle is more often ignored or “lost.” Where information is placed matters as much as what it says.
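A common mitigation for positional decay is to reorder retrieved material so the strongest evidence sits at the edges of the context and the weakest sinks to the middle. The sketch assumes the chunks arrive already sorted from most to least relevant; it is one simple placement heuristic, not the only option.

```python
def edge_first_order(chunks_by_relevance: list[str]) -> list[str]:
    """Input: chunks sorted most-relevant first.
    Output: the most relevant chunks at the start and end, the least relevant in the middle."""
    front: list[str] = []
    back: list[str] = []
    for i, chunk in enumerate(chunks_by_relevance):
        # Alternate: 1st, 3rd, 5th... fill the front; 2nd, 4th... fill the back.
        if i % 2 == 0:
            front.append(chunk)
        else:
            back.append(chunk)
    # Reverse the back half so the 2nd-best chunk ends up in the final position.
    return front + back[::-1]
```

For chunks ranked A (best) through E (worst), the order becomes A, C, E, D, B: the top two chunks occupy the first and last positions, and the weakest lands in the middle.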

Context is Hyper-Compressible. The model’s internal representation of context (the KV cache) is highly redundant. Depending on the method and the task, it can often be compressed by 50-95% with little loss of accuracy. Architecting for compression is a core competency.
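The arithmetic below shows why compression is worth architecting for. It uses the standard KV-cache size estimate (keys plus values, per layer, per KV head, per token) with a hypothetical model configuration of 32 layers, 8 KV heads, head dimension 128, and 16-bit values; the two ratios simply apply the 50% and 95% endpoints of the range above and are not results from any specific compression method.

```python
def kv_cache_bytes(seq_len: int, layers: int, kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    # Factor of 2: each layer stores one key vector and one value vector per KV head per token.
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value

def after_compression(raw_bytes: int, compression: float) -> float:
    # compression=0.5 removes half the cache; compression=0.95 keeps only 5%.
    return raw_bytes * (1.0 - compression)

raw = kv_cache_bytes(seq_len=128_000, layers=32, kv_heads=8, head_dim=128)
for ratio in (0.5, 0.95):
    print(f"{ratio:.0%} compression: {after_compression(raw, ratio) / 1e9:.2f} GB "
          f"(raw {raw / 1e9:.2f} GB)")
```

For a 128,000-token context, this hypothetical configuration needs roughly 16.8 GB of KV cache per sequence before compression, which is why compression directly changes how many long-context requests fit on one accelerator.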

Layer 2: The AI-Native Technology Stack

An operating system requires a new technology stack. The old stack was built to retrieve documents for humans to read. The new stack must retrieve facts for an agent to reason with.

Hybrid Retrieval is Non-Negotiable. The “Vector RAG vs. Graph RAG” debate is a false dichotomy. Vector search is for semantic similarity (“what feels like this?”). Graph search is for precision (“what is this?”). A production-grade system must be Graph-first for precision, with a Vector fallback for similarity.
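A minimal sketch of graph-first retrieval with a vector fallback. Everything here is a toy stand-in: the “graph” is a dict from entity names to facts, entity detection is a case-insensitive substring match, and the embeddings are hand-written vectors rather than output from a real embedding model. A production system would use a proper graph query and vector index; the point is the control flow, where exact answers come first and similarity is used only when precision finds nothing.

```python
import math

Graph = dict[str, list[str]]                  # entity name -> facts (toy knowledge graph)
VectorIndex = list[tuple[str, list[float]]]   # (document text, embedding)

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def hybrid_retrieve(query: str, query_vec: list[float], graph: Graph,
                    index: VectorIndex, k: int = 2) -> tuple[str, list[str]]:
    """Graph-first: return exact facts for any known entity named in the query.
    Fall back to top-k cosine similarity only when no entity matches."""
    q = query.lower()
    facts = [fact for entity, entity_facts in graph.items()
             if entity.lower() in q for fact in entity_facts]
    if facts:
        return "graph", facts
    ranked = sorted(index, key=lambda doc: cosine(query_vec, doc[1]), reverse=True)
    return "vector", [text for text, _ in ranked[:k]]

graph: Graph = {"Acme Pro Plan": ["Acme Pro Plan costs $20/month.", "Acme Pro Plan includes SSO."]}
index: VectorIndex = [
    ("How to reset a password", [1.0, 0.0, 0.0]),
    ("Billing FAQ", [0.0, 1.0, 0.0]),
    ("Security overview", [0.0, 0.0, 1.0]),
]
```

A query that names “Acme Pro Plan” returns its exact facts from the graph; a query that names no known entity falls through to the nearest documents by embedding similarity.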

The Strategic Asset is the Living Context Portfolio (LCP). Models are a commoditizing compute layer. The durable strategic asset is your “memory-first” Living Context Portfolio—the curated, governed, and interconnected graph of your company’s facts, rules, and relationships.

Prompts are Programs, Not Strings. “Zero-copy prompt assembly” is the correct pattern. A prompt is not a static string of text. It is a program—a template rendered at runtime that assembles the final context packet from references to version-controlled Context Objects, policies, and tool definitions.
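The sketch below illustrates the “prompts are programs” idea under stated assumptions. The `{{ref:id@version}}` and `{{var:name}}` placeholder syntax, the `ContextObject` type, and the in-memory `Registry` are all hypothetical. The template stores only references, the final packet is assembled at render time, and every reference must pin a version so the same template always resolves to the same context.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class ContextObject:
    id: str
    version: int
    body: str

class Registry:
    """Hypothetical in-memory store of version-controlled Context Objects."""
    def __init__(self) -> None:
        self._objects: dict[tuple[str, int], ContextObject] = {}

    def put(self, obj: ContextObject) -> None:
        self._objects[(obj.id, obj.version)] = obj

    def get(self, obj_id: str, version: int) -> ContextObject:
        return self._objects[(obj_id, version)]

# {{ref:some.id@3}} pulls a pinned Context Object; {{var:name}} pulls a runtime value.
PLACEHOLDER = re.compile(r"\{\{(ref|var):([\w.-]+)(?:@(\d+))?\}\}")

def render(template: str, registry: Registry, variables: dict[str, str]) -> str:
    def resolve(match: re.Match) -> str:
        kind, name, version = match.groups()
        if kind == "ref":
            if version is None:
                raise ValueError(f"reference to {name!r} must pin a version")
            return registry.get(name, int(version)).body
        return variables[name]
    # Single pass: inserted text is never re-scanned, so a Context Object cannot inject placeholders.
    return PLACEHOLDER.sub(resolve, template)

registry = Registry()
registry.put(ContextObject("policy.refunds", 3, "Refunds are allowed within 30 days."))
registry.put(ContextObject("policy.refunds", 4, "Refunds are allowed within 14 days."))
packet = render("{{ref:policy.refunds@4}}\nUser: {{var:request}}", registry,
                {"request": "Can I get a refund?"})
```

Updating a policy means publishing a new version and bumping the pin in the template, rather than hunting down copied text in every prompt.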

Layer 3: The AI-Native Organizational Structure

You cannot build an AI-native system with an industrial-era org chart. The flow of information is the new org chart. When “context optimization” becomes the physics of your company, your organizational design must reflect that reality.

Information Architecture is Load-Bearing Org Structure. The company’s information architecture is no longer a support function. It is the visible, load-bearing structure of the organization itself. How context flows is the org chart.

The New Bottleneck is Human Evaluation. System throughput is no longer capped by communication or management. It is capped by the “Human-in-the-Loop Ceiling”—the finite cognitive bandwidth of humans to evaluate agent outputs and process exceptions.
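The ceiling can be estimated with simple arithmetic. The function below is an illustrative model with made-up inputs: daily reviewer capacity, divided by the fraction of agent outputs that need a human look, gives the maximum number of agent outputs the organization can absorb per day.

```python
def max_agent_outputs_per_day(reviewers: int, review_minutes_per_day: float,
                              minutes_per_review: float, review_rate: float) -> float:
    """Upper bound on agent outputs per day when review_rate of them need human review."""
    reviews_per_day = reviewers * review_minutes_per_day / minutes_per_review
    return reviews_per_day / review_rate

# Illustrative inputs: 5 reviewers, 4 hours of review each, 6 minutes per review,
# and 20% of agent outputs escalated to a human.
cap = max_agent_outputs_per_day(5, 240, 6, 0.20)
```

Under these assumptions the cap is 1,000 outputs per day no matter how many agents run; raising it means adding reviewer time, making each review faster, or lowering the escalation rate.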

The Immutable Roles Emerge. This new structure demands a set of new, immutable roles that do not exist in traditional companies. The most valuable humans are those who manage agency itself.

The Context Engineer: The owner of the context supply chain. Designs the AI’s reality by curating, architecting, and governing the 99.9% of context that is not the user’s prompt.

The Agent Pilot/Supervisor: The operator of autonomy and risk. Manages the “flight” of agents, orchestrates swarms, and handles all human handoffs and escalations.

The Toolsmith: The builder of the toolchain (APIs) that agents use to act on the world. This role defines the actual capability of the entire system.

The Policy & Risk Engineer: The translator of abstract legal and ethical rules into executable guardrails and hard constraints that govern agent behavior.

The Evaluations Engineer: The builder of the “golden sets,” scorecards, and automated systems to measure agent performance, drift, and quality.

Layer 4: The AI-Native Operational Workflow

When you have a new stack and a new team structure, the final piece is a new way to work. Rigid, human-driven processes are replaced by automated, context-driven protocols.

Goals Replace Tasks; Intent Replaces Process. Humans no longer execute pre-defined tasks. They provide intent (a goal, constraints, and success criteria), and the system autonomously decomposes that intent into an execution plan.
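A structured intent could look like the sketch below. The `Intent` type and its `gaps()` check are hypothetical; they encode the three parts named above (goal, constraints, success criteria) and report which parts are missing, so an underspecified intent can be caught before any decomposition happens.

```python
from dataclasses import dataclass, field

@dataclass
class Intent:
    goal: str
    constraints: list[str] = field(default_factory=list)
    success_criteria: list[str] = field(default_factory=list)

    def gaps(self) -> list[str]:
        """Names of the parts that are still missing; an empty list means ready to plan."""
        missing = []
        if not self.goal.strip():
            missing.append("goal")
        if not self.constraints:
            missing.append("constraints")
        if not self.success_criteria:
            missing.append("success_criteria")
        return missing

vague = Intent(goal="Improve onboarding")
ready = Intent(
    goal="Launch the new onboarding flow",
    constraints=["no new headcount", "reuse existing APIs"],
    success_criteria=["flow live for all new users", "activation rate tracked daily"],
)
```

Only `ready` would be handed to the planner; `vague` comes back with its missing parts named, which turns “clarity of intent” into something the system can check.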

The “Execute-Critique-Repair” (ECR) Loop is the Engine of Work. The fundamental unit of execution is a three-agent loop: a “Doer” produces an artifact, a “Critic” runs the artifact against its quality contract, and a “Fixer” patches the gaps. The loop repeats until the evidence bundle (the Critic’s record of results against the contract) shows every check passing.
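The ECR loop can be sketched with plain functions. The Doer and Fixer are passed in as callables (in practice they would be model-backed agents), the quality contract is a hypothetical mapping from check names to predicates, and the Critic returns the names of failing checks as that round’s evidence. The `max_rounds` cap is an added assumption so a contract that can never be satisfied fails loudly instead of looping forever.

```python
from typing import Callable

Contract = dict[str, Callable[[str], bool]]   # check name -> predicate on the artifact

def critic(artifact: str, contract: Contract) -> list[str]:
    """Run every check in the quality contract; return the names of failing checks."""
    return [name for name, check in contract.items() if not check(artifact)]

def ecr_loop(doer: Callable[[], str],
             fixer: Callable[[str, list[str]], str],
             contract: Contract,
             max_rounds: int = 5) -> tuple[str, list[list[str]]]:
    artifact = doer()                                 # Execute
    evidence: list[list[str]] = []
    for _ in range(max_rounds):
        failures = critic(artifact, contract)         # Critique
        evidence.append(failures)
        if not failures:                              # every check passes
            return artifact, evidence
        artifact = fixer(artifact, failures)          # Repair
    raise RuntimeError(f"still failing after {max_rounds} rounds: {evidence[-1]}")

contract: Contract = {
    "has_title": lambda a: a.startswith("# "),
    "mentions_pricing": lambda a: "pricing" in a,
}

def fix(artifact: str, failures: list[str]) -> str:
    if "has_title" in failures:
        artifact = "# " + artifact
    if "mentions_pricing" in failures:
        artifact += "\nSee pricing."
    return artifact

result, evidence = ecr_loop(lambda: "Launch notes", fix, contract)
```

Here the first critique fails both checks, the Fixer patches both, and the second critique passes, so the evidence history records exactly what was wrong and when it was resolved.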

The “Stand-Up” is an Asynchronous Anomaly Alert. The daily stand-up meeting is obsolete. It is replaced by an asynchronous, auto-generated state snapshot that fires an alert only when a blocker, risk, or deviation is detected.
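A sketch of an alert-only stand-up: each workstream reports a state snapshot, and an alert fires only for blockers, risks, or schedule deviation beyond a threshold. The `WorkstreamState` fields and the 15% default threshold are illustrative assumptions, not a prescribed schema.

```python
from dataclasses import dataclass, field

@dataclass
class WorkstreamState:
    name: str
    planned_progress: float          # fraction of the plan expected done by now (0 to 1)
    actual_progress: float           # fraction actually done (0 to 1)
    blockers: list[str] = field(default_factory=list)
    risks: list[str] = field(default_factory=list)

def anomaly_alerts(snapshot: list[WorkstreamState], max_deviation: float = 0.15) -> list[str]:
    """One alert per workstream that has a blocker, a risk, or drifts from plan by more
    than max_deviation. An empty list means nothing needs human attention."""
    alerts = []
    for ws in snapshot:
        reasons = [f"blocker: {b}" for b in ws.blockers] + [f"risk: {r}" for r in ws.risks]
        deviation = abs(ws.planned_progress - ws.actual_progress)
        if deviation > max_deviation:
            reasons.append(f"off plan by {deviation:.0%}")
        if reasons:
            alerts.append(f"{ws.name}: " + "; ".join(reasons))
    return alerts

snapshot = [
    WorkstreamState("checkout", 0.50, 0.48),
    WorkstreamState("app-listing", 0.60, 0.30),
    WorkstreamState("brand", 0.40, 0.40, blockers=["legal review pending"]),
]
alerts = anomaly_alerts(snapshot)
```

The on-track workstream produces no output at all; only the two workstreams that need a human show up.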

Requirements Engineering is the New Primary Bottleneck. When implementation becomes cheap and fast, the primary organizational bottleneck becomes the clarity of the intent. The ability to precisely define what to build becomes the most valuable human skill in the entire company.

Part 3: The New World

The Hidden Bottleneck: Context Integrity

Even as the architecture matures, our tools remain primitive. Developers are still building minds blindfolded.

We can’t inspect what a model “remembers.”

We can’t trace how context mutates over time.

We can’t see where it drifts into error or decay.

This opacity creates predictable pathologies:

Context Poisoning: Irrelevant or contradictory data contaminates reasoning.

Context Rot: Long-running systems accumulate stale, redundant, or low-signal data until they collapse under their own weight.
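Basic context hygiene can target both pathologies. The sketch below is a hypothetical compaction pass, not a complete defense: it drops entries older than a time-to-live (rot), keeps only the newest value for each key (redundancy), and reports keys whose live entries disagree so a human or an adjudicating agent can resolve them (possible poisoning). The `Entry` schema and the newest-wins policy are assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Entry:
    key: str           # what the fact is about, e.g. "refund_window"
    value: str
    timestamp: float   # when the fact was written (seconds)

def compact(entries: list[Entry], now: float, ttl_s: float) -> tuple[list[Entry], list[str]]:
    """Drop stale entries, keep the newest value per key, and flag conflicting keys."""
    live = [e for e in entries if now - e.timestamp <= ttl_s]     # rot: expire old facts
    newest: dict[str, Entry] = {}
    values_seen: dict[str, set[str]] = {}
    for e in sorted(live, key=lambda entry: entry.timestamp):
        newest[e.key] = e                                          # later writes win
        values_seen.setdefault(e.key, set()).add(e.value)
    conflicts = sorted(k for k, vals in values_seen.items() if len(vals) > 1)
    return list(newest.values()), conflicts

entries = [
    Entry("old_promo", "BOGO", 10),
    Entry("refund_window", "30 days", 600),
    Entry("refund_window", "14 days", 900),
    Entry("plan", "Pro", 950),
    Entry("plan", "Pro", 960),
]
kept, conflicts = compact(entries, now=1000, ttl_s=500)
```

The expired promo disappears, the duplicate plan entry collapses to one, and the two disagreeing refund windows are resolved to the newest value while still being flagged for review.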

Bigger models don’t fix this. More GPUs don’t fix this.

The constraint is context integrity—the ability to maintain a clean, coherent, and trustworthy internal state. Until we master that, AI will remain a brilliant savant with a memory problem.

The Rise of the Context Engineer

Inside this new system, the most valuable human role isn’t the prompt whisperer. It’s the Context Engineer—the person who designs the informational and cultural scaffolding that makes intelligence reliable, truthful, and aligned.

A Context Engineer doesn’t write prompts. They build worlds. They are the CEO of their domain.

At Demand.io, this isn’t a title—it’s our default way of operating. Every person here is a Context Engineer, owning a domain of intelligence that compounds the shared brain we call AIOS.

The Product Context Engineer architects frameworks that guide agents as they design, test, and ship features.

The Systems Context Engineer builds the infrastructure that keeps our shared brain stable and fast—engineering the code pathways, APIs, and integrations that turn strategy into executable intelligence.

The Growth Context Engineer shapes the informational environment that unites product, brand, and community into a coherent narrative.

The People Context Engineer tends the culture itself—curating the Codex, aligning rituals, and preserving the first principles that keep the system human.

Together, these domains form the synapses of our organizational mind. When one person learns, the company learns. When one context improves, every agent gets smarter. This is what replaces management layers and meeting sprawl: a structure where every operator behaves like the CEO of their domain, and alignment happens at the speed of context.

In the factory, people executed tasks. In the studio, they engineer understanding.

The Final Frontier: Architecting Truth

The next revolution in AI isn’t just organizational—it’s philosophical.

Most companies treat context engineering as a filtering problem: how to manage the noisy, unreliable information of the open web. That’s too small a goal. The real opportunity is to move beyond passively filtering noise to proactively creating a verified, stateful truth layer.

This is the work we are doing with ShopGraph, our Commerce Truth Engine. While other systems use RAG to retrieve information from the messy, probabilistic web, our agents retrieve from a stateful reasoning brain that adjudicates truth, not just relevance.

This is the final shift: from engineering context to architecting truth.

When you build an organization this way, something unexpected happens. The lines between human and machine blur—not in a dystopian way, but in a deeply cooperative one. People stop fighting the system and start thinking with it. AI doesn’t replace judgment; it magnifies it.

The result isn’t less humanity. It’s more. We spend less time in meetings and more time in flow. We argue less about logistics and more about ideas. The magic isn’t the technology. It’s the feeling of being connected.

The first era of AI was defined by fascination with the interface—the chat box, the syntax, the magic words. The next era will be defined by mastery of the environment—the operating system in which intelligence lives.

We are leaving the age of prompting. We are entering the age of architecting. The future will belong to those who design systems of shared understanding—who build not just better models, but better minds.