The Age of Agents is Here. Stop Designing Chatbots.

The deterministic GUI is dead. The new job of AI product design is to stop designing screens and start architecting trust for probabilistic, agentic systems.

The rules of HCI are dead. The new physics of autonomy demand that we architect Trust, not Usability. Stop designing for screens. Start designing for delegation.

Part 1: The Industry’s Fatal Misdiagnosis

Our entire industry is trapped in a cargo cult, worshipping the wrong god: the chatbot.

We’re collectively optimizing a transitional artifact—a reactive, stateless, high-friction interface that we’re desperately bolting onto a world that demands autonomy. This isn’t iteration. It’s architectural malpractice.

For decades, you—the 10x designer, the 10x PM, the 10x engineer—built your career mastering the physics of the GUI. You became an expert in usability, in minimizing cognitive load, in shepherding users through visual, deterministic workflows.

Those skills aren’t evolving. They’re expiring.

The industry’s comfortable lie: AI is just a new UI layer, a “Conversational UI” that replaces the GUI. This is categorically false. We’re applying yesterday’s usability playbook to tomorrow’s autonomy problem.

You’re solving for the wrong physics.

Part 2: The Phase Change—From Operator to Architect

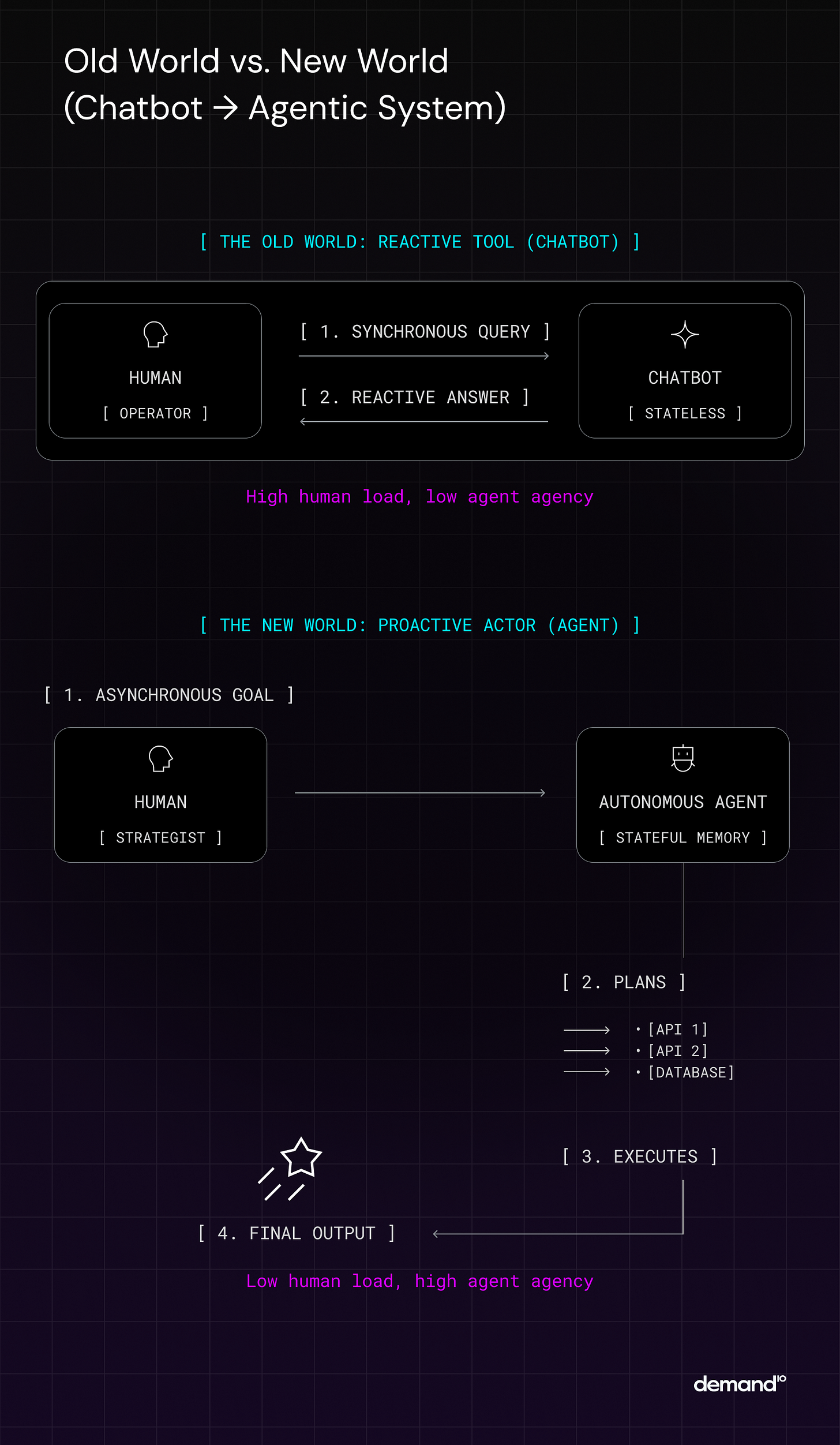

The shift from GUI to AI isn’t an upgrade. It’s a phase change in the fundamental distribution of agency between human and machine.

The Old Physics: You designed Reactive Tools. The human held all agency. Your job was to architect usability—to reduce friction as the human operator executed deterministic, step-by-step tasks.

The New Physics: You architect Proactive Actors. Agency flows bidirectionally. Your job is to architect trust—to make it safe for the human strategist to delegate complex, goal-driven outcomes.

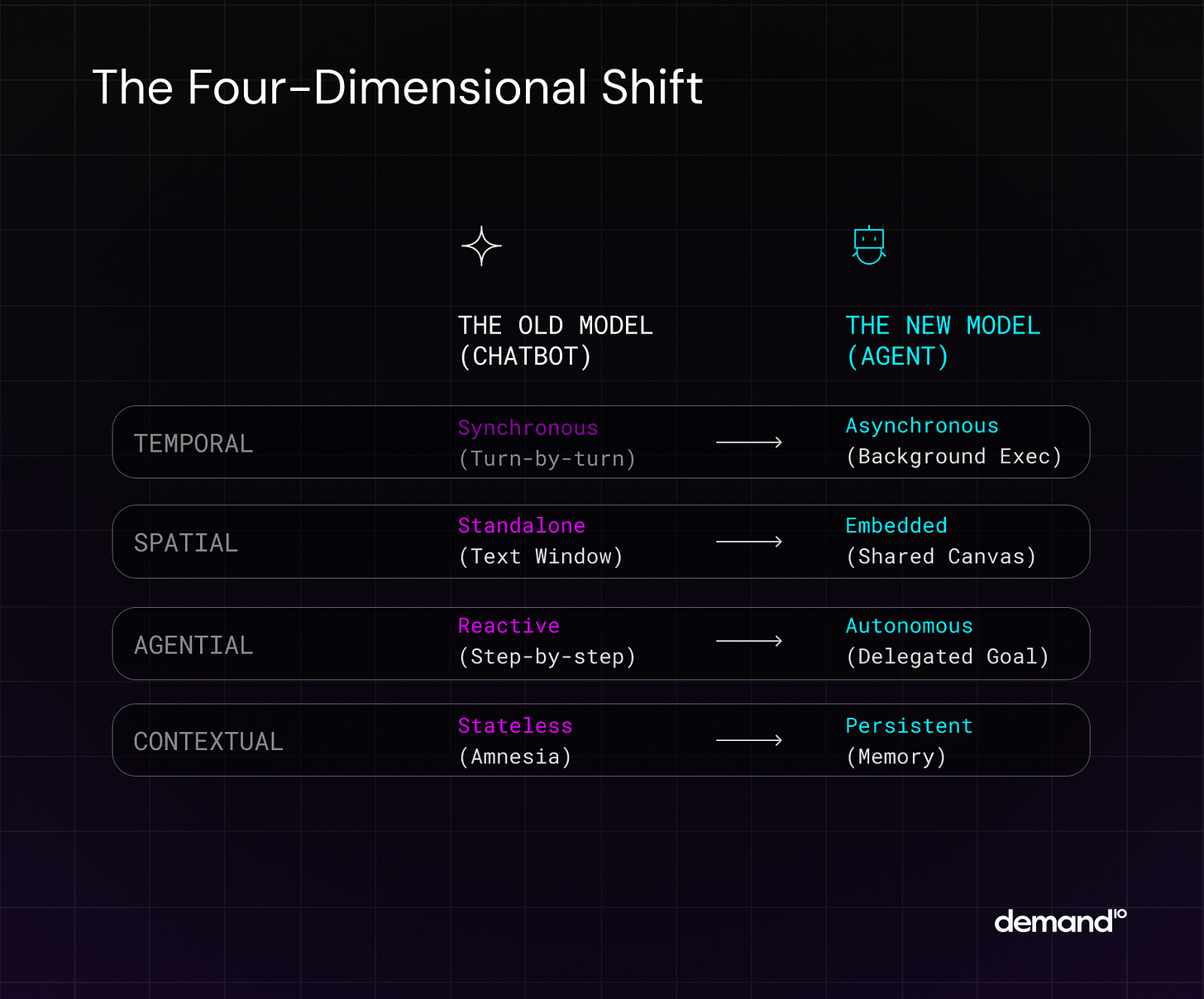

This phase change manifests across four simultaneous dimensions:

The Temporal Dimension

From synchronous, turn-by-turn interaction to asynchronous, background execution. You delegate a task and receive results hours later. The agent works while you sleep.

The Spatial Dimension

From isolated chat windows to spatially-embedded copilots. The agent inhabits your canvas, edits your code, and transforms your document in real time.

The Agential Dimension

From reactive commands (“click here, type this”) to autonomous objectives (“maximize conversion while maintaining brand voice”). You stop instructing. You start delegating.

The Contextual Dimension

From stateless amnesia to persistent memory architectures. The agent accumulates longitudinal understanding—your patterns, preferences, and strategic objectives compound over time.

When these four dimensions converge, the user’s primary question transforms from “How do I use this?” to “Can I trust this to act correctly when I’m not watching?”

The discipline inverts. Usability becomes table stakes. Trust becomes the product.

Part 3: The New Physics of Trust

Trust in autonomous systems isn’t built with personality or polish. It’s architected through transparency and control.

The Search-Engine Fallacy

Users are imprisoned by twenty years of conditioning. They believe AI retrieves vetted information from a cosmic database.

Wrong. AI generates probabilistic, synthetic answers in real time. It’s not a librarian finding books. It’s an author writing them.

This mental model mismatch creates uncalibrated reliance: users either trust blindly (catastrophic errors) or reject entirely (wasted potential). They evaluate accuracy using the wrong signals—authoritative tone, response speed, surface plausibility—instead of understanding the generative process itself.

The Verification Tax

Every AI output imposes a new cognitive burden: the Verification Tax.

Each one hands the user a new, high-stakes task: “Is this true? Is this complete? Is this biased? Is this a hallucination?” The user’s engagement becomes an economic calculation: is the perceived mental cost of verifying this work lower than the perceived cost of an error?
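To make that calculation concrete, here is a minimal sketch. It assumes the perceived cost of an error can be approximated as the probability of an error times its cost; that modeling choice, the function name `should_verify`, and its parameters are illustrative assumptions, not something the argument above specifies.

```python
def should_verify(verify_cost: float, error_probability: float, error_cost: float) -> bool:
    """Return True when checking the output is cheaper than the expected cost of an error.

    All three inputs are the user's own estimates, in whatever unit they
    think in (minutes, dollars, reputation points).
    """
    if not 0.0 <= error_probability <= 1.0:
        raise ValueError("error_probability must be between 0 and 1")
    # Assumption: perceived error cost = chance of an error * cost if it happens.
    expected_error_cost = error_probability * error_cost
    return verify_cost < expected_error_cost
```

A five-minute check against a 10% chance of a 1,000-minute cleanup is worth paying. The same check against a 1% chance of a 100-minute cleanup is not, and that is exactly where users stop verifying.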

The Validation Bottleneck

As agents handle more execution, human cognitive load doesn’t disappear—it migrates. We’ve shifted the burden from input (crafting prompts) to output (validating complex, autonomously-generated results).

We have “solved” the problem of execution only to create a massive new Validation Bottleneck. This is the new, unsolved frontier of our work.

Engineering Calibrated Trust

Trust isn’t earned through friendly avatars or smooth animations. Trust is engineered through system architecture.

Calibrated Trust means the user’s reliance perfectly matches the system’s actual, context-specific reliability. No more, no less.
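One way to reason about calibration is as a gap between two rates measured in the same context. The sketch below is an assumption-laden illustration: `ContextStats`, its fields, and the idea of measuring reliance as "accepted without checking" are hypothetical choices, not an established metric.

```python
from dataclasses import dataclass


@dataclass
class ContextStats:
    """Hypothetical counters for one context (e.g. 'SQL generation')."""
    outputs: int             # agent outputs the user received
    accepted_unchecked: int  # outputs the user accepted without verifying
    correct: int             # outputs later found to be correct


def calibration_gap(stats: ContextStats) -> float:
    """Reliance minus reliability for one context.

    Positive means over-trust, negative means under-trust,
    and zero means reliance matches reliability.
    """
    if stats.outputs == 0:
        raise ValueError("no outputs recorded for this context")
    reliance = stats.accepted_unchecked / stats.outputs
    reliability = stats.correct / stats.outputs
    return reliance - reliability
```

A user who waves through 90 of 100 outputs from an agent that is right 60 times is over-trusting by roughly 0.3. The design goal is to drive that number toward zero per context, not to maximize reliance.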

Two architectural pillars make this possible:

Pillar 1: Legibility

The agent must expose its reasoning chain. Not just what it did, but why. Not just the answer, but the evidence. Make the black box transparent.

Pillar 2: Control

The user must retain sovereignty. Pause, interrupt, override, rollback—these aren’t features. They’re constitutional rights in the age of autonomy.
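In practice, control implies that every agent action is recorded alongside a way to undo it. The following is a minimal sketch under that assumption; `Action`, `ControlledAgent`, and their methods are hypothetical names for illustration, and a real system would also need to handle actions that cannot be reversed.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    """One reversible step: how to do it and how to undo it."""
    name: str
    apply: Callable[[], None]
    undo: Callable[[], None]


@dataclass
class ControlledAgent:
    paused: bool = False
    history: List[Action] = field(default_factory=list)

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def run(self, action: Action) -> bool:
        """Execute an action unless the user has paused the agent."""
        if self.paused:
            return False
        action.apply()
        self.history.append(action)
        return True

    def rollback(self, steps: int = 1) -> int:
        """Undo the most recent actions, newest first. Returns how many were undone."""
        undone = 0
        while self.history and undone < steps:
            self.history.pop().undo()
            undone += 1
        return undone
```

The design choice worth noticing: the undo path is captured at the moment the action is created, not reconstructed after something goes wrong.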

Without these pillars, “trust” is just well-designed deception.

Part 4: Five Architectures to Replace the Chatbot

The chatbot is dead. These five patterns are the new atomic units of agentic design:

1. The Spatially-Embedded Copilot

Core mechanic: Shared canvas sovereignty

The agent sees your work and acts directly upon it. Conversation becomes a control surface for deep spatial integration. GitHub Copilot writing code. Cursor editing entire files. The chat is secondary to the shared workspace.

2. The Asynchronous Background Agent

Core mechanic: Temporal decoupling

Delegation replaces conversation. “Research these three markets and deliver a comparative analysis by Monday.” The agent works while you focus elsewhere. Design challenge: managing job queues, progressive disclosure of results, and the validation handoff.
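The validation handoff can be sketched as an explicit state in the job lifecycle. The states, the transition table, and the `Job` class below are illustrative assumptions about how such a queue might be modeled, not a description of any particular product.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class JobState(Enum):
    QUEUED = "queued"
    RUNNING = "running"
    AWAITING_REVIEW = "awaiting_review"  # the validation handoff
    APPROVED = "approved"
    REJECTED = "rejected"


# Assumed lifecycle: nothing reaches APPROVED without passing through human review.
TRANSITIONS = {
    JobState.QUEUED: {JobState.RUNNING},
    JobState.RUNNING: {JobState.AWAITING_REVIEW},
    JobState.AWAITING_REVIEW: {JobState.APPROVED, JobState.REJECTED},
    JobState.APPROVED: set(),
    JobState.REJECTED: {JobState.QUEUED},  # a rejected job can be re-queued
}


@dataclass
class Job:
    goal: str
    state: JobState = JobState.QUEUED
    summary: Optional[str] = None                      # shown first
    details: List[str] = field(default_factory=list)   # disclosed on demand

    def advance(self, new_state: JobState) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state.value} to {new_state.value}")
        self.state = new_state

    def deliver(self, summary: str, details: List[str]) -> None:
        """Agent hands results back: a short summary plus expandable detail."""
        self.advance(JobState.AWAITING_REVIEW)
        self.summary = summary
        self.details = details
```

Splitting the result into `summary` and `details` is one simple way to express progressive disclosure: the reviewer sees the headline first and drills into evidence only when needed.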

3. The Goal Executor

Core mechanic: Pure outcome delegation

Interaction reduces to: (1) state objective, (2) review output. The agent autonomously plans, executes, and chains tools. “Get our app to #1 in the App Store” becomes a valid prompt. Everything between intention and outcome becomes the agent’s problem.

4. The Ambient Agent

Core mechanic: Inverted initiation

The agent monitors context streams (email, calendar, system events) and initiates interaction. It identifies problems before you do. “I noticed three meetings tomorrow discuss Q4 planning. I’ve drafted a unified strategy deck based on your recent metrics.”

5. The Multi-Agent System

Core mechanic: Distributed cognition

You become the architect of agent teams. Specialist agents collaborate: researcher, writer, critic, editor. You design the workflow, set governance rules, manage handoffs. You’ve graduated from operator to orchestrator.
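As a rough sketch of what orchestration means in code, the pipeline below passes work through named specialists in order, logs every handoff, and enforces a single governance rule after each step. The agents here are plain functions standing in for real models; `run_pipeline`, `Agent`, and the `rule` parameter are hypothetical names chosen for illustration.

```python
from typing import Callable, List, Tuple

# Assumption: each specialist agent is modeled as a function from text to text.
Agent = Callable[[str], str]


def run_pipeline(
    task: str,
    stages: List[Tuple[str, Agent]],
    rule: Callable[[str], bool],
) -> Tuple[str, List[str]]:
    """Run the task through each specialist and check the governance rule at every handoff.

    Returns the final work product and the ordered list of agents that handled it.
    """
    work = task
    handoffs: List[str] = []
    for name, agent in stages:
        work = agent(work)
        if not rule(work):
            # Stop the whole workflow rather than pass bad work downstream.
            raise ValueError(f"governance rule violated after '{name}'")
        handoffs.append(name)
    return work, handoffs
```

The orchestrator’s decisions live in two places: the order of `stages` and the content of `rule`. Neither requires drawing a single screen.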

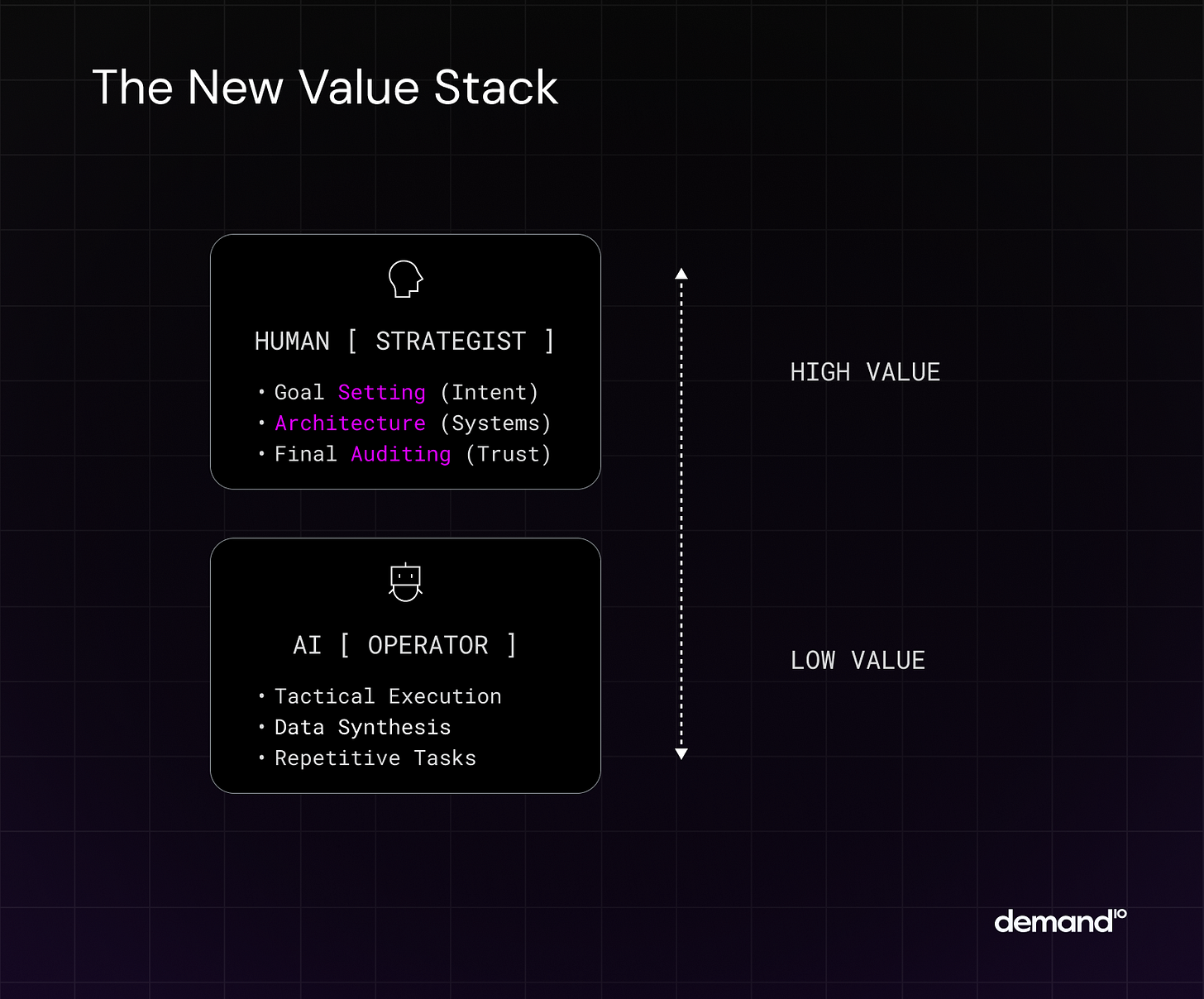

Part 5: Your New Job—The Human as Strategist

Autonomy doesn’t replace 10x talent. It amplifies it.

As agents absorb tactical execution, human value migrates up the stack. You’re not being automated. You’re being promoted.

Three role transformations define this new reality:

From Operator to Strategist

Your value shifts from executing plans to defining objectives worth pursuing. The quality of your strategic thinking becomes your primary differentiator.

From Designer to Architect

You stop drawing interfaces. You start orchestrating multi-agent workflows. You architect the system of systems that transforms intention into outcome.

From Tester to Auditor

You stop checking for bugs. You start validating complex, emergent outputs. You become the final arbiter of quality in a world of probabilistic generation.

The old paradigm needed operators who could follow maps.

The new paradigm demands strategists who can draw them.

Welcome to the age of agents.

Stop building chatbots.

Start architecting trust.